Assignment 7 - Introduction to Ray Tracing

Part 1 Due Wednesday Dec. 4, 2019 at 3:00 PM

Part 2 Due Wednesday Dec. 11, 2019 at 3:00 PM, no extensions

Overview

So far, all of our work in this course has been with polygonal models. Shading methods such as the Phong model can smooth over these models, but they are fundamentally an approximation of some exact surface. Furthermore, we have not discussed how to handle shadows, reflections, or refraction through materials such as glass when using a tool such as OpenGL or our own shader program. One common technique that tackles all of these issues at once, at the cost of real-time speed, is ray tracing.

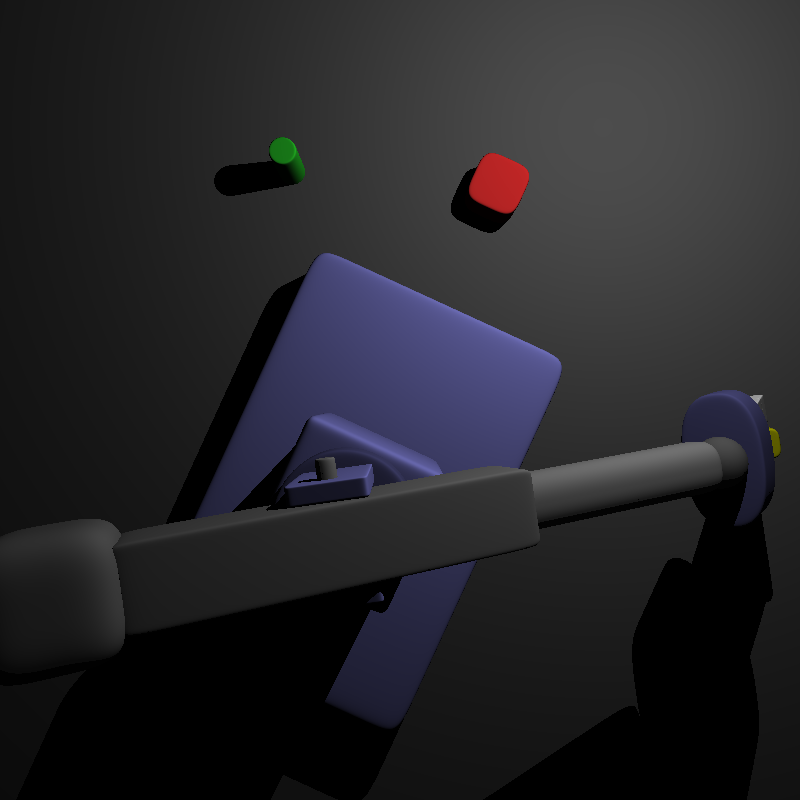

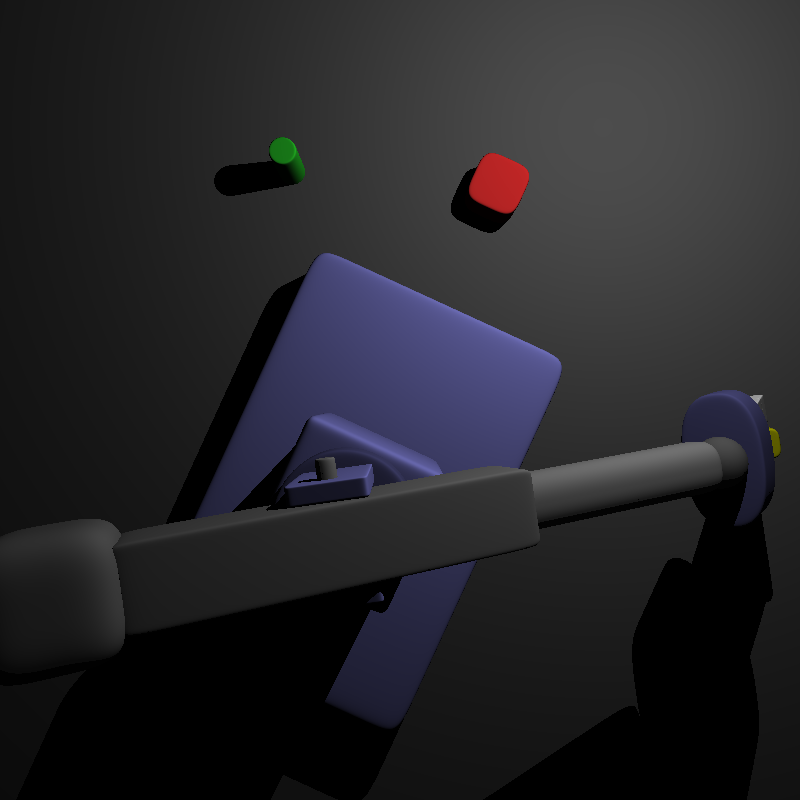

As the name implies, this involves tracing the path that a ray of light follows to the camera as it interacts with different objects in the scene. In practice, the ray is traced backward, starting from the camera itself. To determine where the ray interacts with a surface, we need a way to express the surface mathematically so that we can solve, explicitly or at least approximately, for the point(s) where the ray intersects it. While it’s possible to do this for the interpolated smooth surface of tessellated models, it turns out to be easier to use implicitly defined surfaces. Specifically, we will ask you to ray trace scenes composed of a class of shapes called superquadrics, along with various light sources.
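The simplest implicit surface is a sphere centered at the origin. For a sphere, substituting the ray into the surface equation gives a quadratic in the ray parameter $t$, which can be solved in closed form. The sketch below makes "solving explicitly" concrete for that case.

It rests on a few assumptions:
- The ray is written as $\mathbf{r}(t) = \mathbf{b} + t\,\mathbf{a}$, with origin $\mathbf{b}$ and direction $\mathbf{a}$.
- It uses a minimal hypothetical `Vec3` type instead of Eigen.

General superquadrics have no closed-form solution like this. A test of this kind is therefore typically used as a bounding-sphere check, or to produce an initial guess for an iterative solver.

```cpp
#include <cassert>
#include <cmath>

// Minimal 3-vector for this sketch only (the assignment itself uses Eigen).
struct Vec3 { double x, y, z; };

double dot(const Vec3& u, const Vec3& v) { return u.x * v.x + u.y * v.y + u.z * v.z; }

// Closed-form intersection of the ray r(t) = b + t a with a sphere of the given
// radius centered at the origin. On a hit, stores the smallest t >= 0 in tHit.
bool intersectSphere(const Vec3& a, const Vec3& b, double radius, double& tHit) {
    // Substituting r(t) into |p|^2 - radius^2 = 0 gives the quadratic
    // (a.a) t^2 + 2 (a.b) t + (b.b - radius^2) = 0.
    double qa = dot(a, a);
    double qb = 2.0 * dot(a, b);
    double qc = dot(b, b) - radius * radius;
    double disc = qb * qb - 4.0 * qa * qc;
    if (disc < 0.0) return false;               // no real roots: the ray misses
    double s = std::sqrt(disc);
    double t0 = (-qb - s) / (2.0 * qa);         // nearer root
    double t1 = (-qb + s) / (2.0 * qa);         // farther root
    if (t0 >= 0.0) { tHit = t0; return true; }
    if (t1 >= 0.0) { tHit = t1; return true; }  // ray starts inside the sphere
    return false;                               // sphere lies entirely behind the ray
}
```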

To help you with this, we have provided a modeling program composed of a command line and a viewer. The command line can be used to build scenes manually out of superquadric primitives or to load files containing a series of modeling commands. The viewer lets you view the current scene and explore it through an arcball interface and other commands. The end goal of this assignment is to traverse the provided data structure that stores the scene’s objects and render the scene using ray tracing. The assignment is broken into two parts. In the first, you will focus on becoming familiar with the math needed to complete the task. In the second, you will focus on actually ray tracing the scene.

Before you begin, you may want to review the assignment material with these lecture notes, and review how to use the program we provide with these specification notes. The files for this assignment can be downloaded here. More in-depth details for the assignment will be given in the sections below.

Renderer Controls

Since we are providing you with a renderer for this assignment, its keyboard controls are as follows:

Your Assignment: Part 1 (50 pts)

The first part of this assignment will help you get a better grasp of the renderer and of the math we will use to ray trace a scene. There are two main tasks you need to complete for this part, both in Assignment.cpp:

To intersect this vector with the scene, you must test whether it hits each superquadric and choose the intersection (if there is one) closest to the camera. Then, your function should use glBegin(GL_LINES), glEnd(), and glVertex3f() to draw a vector of length 1. The vector should start at the intersection point and point in the direction of the surface normal there. Instances of the Primitive class provide a getNormal() function, which takes an Eigen::Vector3f representing a point on the superquadric’s surface. The function assumes that the superquadric is centered at the origin, but it does account for the superquadric’s own scaling. As a result, if you have, say, a superquadric sphere of radius 2, you should pass getNormal() a vector such as (0, 2, 0) rather than the unscaled (0, 1, 0), which isn’t on its surface.
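One way to carry out the per-superquadric test is Newton's method applied to $g(t) = S(\mathbf{b} + t\,\mathbf{a})$, where $S$ is the superquadric's inside-outside function. The sketch below takes that approach under several assumptions:
- $S$ has the form $S(x, y, z) = \left((x^2)^{1/e} + (y^2)^{1/e}\right)^{e/n} + (z^2)^{1/n} - 1$, for an unscaled superquadric with exponents $e$ and $n$.
- The ray has already been transformed into the object's space.
- An initial guess $t_0$ is available, for instance from a bounding-sphere test.

`Vec3`, `insideOutside`, `gradient`, `newtonIntersect`, `Candidate`, and `closestHit` are hypothetical names. They are not part of the provided code.

```cpp
#include <cassert>
#include <cmath>
#include <vector>

struct Vec3 { double x, y, z; };

double dot(const Vec3& u, const Vec3& v) { return u.x * v.x + u.y * v.y + u.z * v.z; }

// Assumed inside-outside function of an unscaled superquadric with exponents e, n:
// S < 0 inside, S = 0 on the surface, S > 0 outside.
double insideOutside(const Vec3& p, double e, double n) {
    double xy = std::pow(p.x * p.x, 1.0 / e) + std::pow(p.y * p.y, 1.0 / e);
    return std::pow(xy, e / n) + std::pow(p.z * p.z, 1.0 / n) - 1.0;
}

// Gradient of insideOutside(). Coordinates that are exactly zero contribute 0,
// so pow(0, negative) never produces inf * 0 = NaN.
Vec3 gradient(const Vec3& p, double e, double n) {
    double xy = std::pow(p.x * p.x, 1.0 / e) + std::pow(p.y * p.y, 1.0 / e);
    auto dxy = [&](double c) {
        if (c == 0.0) return 0.0;
        return (2.0 * c / n) * std::pow(c * c, 1.0 / e - 1.0) * std::pow(xy, e / n - 1.0);
    };
    double dz = (p.z == 0.0) ? 0.0 : (2.0 * p.z / n) * std::pow(p.z * p.z, 1.0 / n - 1.0);
    return {dxy(p.x), dxy(p.y), dz};
}

// Newton's method on g(t) = S(b + t a) starting from t0. Returns false on a miss.
bool newtonIntersect(const Vec3& a, const Vec3& b, double e, double n, double t0, double& tHit) {
    double t = t0;
    for (int iter = 0; iter < 100; ++iter) {
        Vec3 p{b.x + t * a.x, b.y + t * a.y, b.z + t * a.z};
        double g = insideOutside(p, e, n);
        if (std::fabs(g) < 1e-6) { tHit = t; return t >= 0.0; }
        double dg = dot(gradient(p, e, n), a);  // g'(t) by the chain rule
        // Simple heuristic: if g is no longer decreasing, the ray is not
        // approaching the surface, so report a miss.
        if (dg >= 0.0) return false;
        t -= g / dg;
    }
    return false;  // did not converge
}

struct Candidate { bool hit; double t; };

// Index of the nearest hit among all superquadrics, or -1 if nothing was hit.
int closestHit(const std::vector<Candidate>& candidates) {
    int best = -1;
    for (int i = 0; i < static_cast<int>(candidates.size()); ++i)
        if (candidates[i].hit && (best < 0 || candidates[i].t < candidates[best].t)) best = i;
    return best;
}
```

With $e = n = 1$, the function reduces to $x^2 + y^2 + z^2 - 1$, the unit sphere, which makes a convenient sanity check. The miss heuristic, which gives up once $g'(t) \ge 0$, is a simplification. It can misjudge rays that graze strongly non-convex superquadrics.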

As with the drawIOTest() function, don’t forget to apply each superquadric’s inverse transformations to your ray. Additionally, the arcball rotation does not affect the location of the camera or light structs, only how they appear in the viewer. You can therefore still use it to rotate the scene and check that the normal vector you draw looks correct.
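The inverse-transform step can be sketched as follows. The sketch assumes the object's inverse transform is already available as a 4x4 matrix. In the real code this would be an Eigen matrix, for example one obtained with `inverse()`. The `Mat4`, `Ray`, and helper functions here are hypothetical.

The key detail is the difference between the two parts of the ray:
- The ray origin is a point ($w = 1$), so translation applies to it.
- The direction is a vector ($w = 0$), so translation does not.

```cpp
#include <cassert>

struct Vec3 { double x, y, z; };

// Row-major 4x4 matrix in homogeneous coordinates (hypothetical helper type;
// in the assignment you would use Eigen::Matrix4f and Eigen::Vector4f instead).
struct Mat4 { double m[4][4]; };

// Points carry w = 1, so the translation column applies.
Vec3 transformPoint(const Mat4& M, const Vec3& p) {
    double out[4];
    for (int r = 0; r < 4; ++r)
        out[r] = M.m[r][0] * p.x + M.m[r][1] * p.y + M.m[r][2] * p.z + M.m[r][3];
    // Affine transforms keep w = 1; dividing is harmless and handles the general case.
    return {out[0] / out[3], out[1] / out[3], out[2] / out[3]};
}

// Directions carry w = 0, so the translation column is ignored.
Vec3 transformDirection(const Mat4& M, const Vec3& d) {
    return {M.m[0][0] * d.x + M.m[0][1] * d.y + M.m[0][2] * d.z,
            M.m[1][0] * d.x + M.m[1][1] * d.y + M.m[1][2] * d.z,
            M.m[2][0] * d.x + M.m[2][1] * d.y + M.m[2][2] * d.z};
}

struct Ray { Vec3 origin, direction; };

// Map a world-space ray into an object's local space using its inverse transform.
// The direction is deliberately NOT renormalized: Minv * (b + t a) equals
// Minv*b + t * (Minv*a), so a hit at parameter t in object space is at the same t
// in world space.
Ray toObjectSpace(const Mat4& inverse, const Ray& world) {
    return {transformPoint(inverse, world.origin), transformDirection(inverse, world.direction)};
}
```

Keeping $t$ identical in both spaces makes it easy to compare hits from differently transformed superquadrics when choosing the closest one.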

Your Assignment: Part 2 (50 pts)

For this part of the assignment, you must provide an implementation of the raytrace() function, in Assignment.cpp. This function should send a ray out from the camera through each pixel in a grid of arbitrary size, and compute the lighting at its first intersection with a superquadric. The point breakdown is as follows:
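A per-pixel ray direction can be sketched as below. This is not necessarily the exact parameterization from the lecture notes. It assumes:
- a camera-space frame in which the camera looks down $-z$, with $+x$ to the right and $+y$ up;
- an image plane at distance `nearDist`;
- a vertical field of view `fovy` in radians, and an aspect ratio of width over height;
- rays through pixel centers, with pixel $(0, 0)$ at the lower left.

To get a world-space ray, you would still rotate this direction by the camera's orientation and use the camera's position as the origin.

```cpp
#include <cassert>
#include <cmath>

struct Vec3 { double x, y, z; };

// Camera-space direction of the ray through the center of pixel (i, j) in an
// Nx x Ny image, where (0, 0) is the lower-left pixel (assumed conventions).
Vec3 pixelRayDirection(int i, int j, int Nx, int Ny,
                       double nearDist, double fovy, double aspect) {
    double h = 2.0 * nearDist * std::tan(fovy / 2.0);  // image-plane height
    double w = aspect * h;                              // image-plane width
    double x = (i + 0.5 - Nx / 2.0) * (w / Nx);         // offset to the right
    double y = (j + 0.5 - Ny / 2.0) * (h / Ny);         // offset upward
    return {x, y, -nearDist};                           // camera looks down -z
}
```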

Since the ray tracing function may take some time to complete, we require that you also include images you have ray traced in your submission. We will still run and verify your code. A preliminary image, however, helps a lot in diagnosing what’s wrong and in giving you helpful feedback.

To make this a bit less of a chore, we’ve provided a PNGMaker class to store and manipulate the grid of pixels composing your image. Once the raytrace() function returns, it automatically writes the result to a file called rt.png in whatever directory you ran the program from. You’ll need to make sure the libpng library is installed, which you can do on Ubuntu with

sudo apt-get install libpng-dev

You can set the color of a pixel using the setPixel() method. It takes two ints representing the $i$ and $j$ indices of the pixel, and three floats in the range $[0, 1]$ representing the red, green, and blue intensities of the pixel. The provided version of raytrace() contains a simple example of its usage, looping through the entire grid and coloring it entirely white. Note that the lecture notes’ derivation of the ray equation assumes $(0, 0)$ to be the lower-left corner of the screen, so the PNGMaker class assumes this as well.
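The hypothetical `Image` class below is not PNGMaker's implementation. It only sketches what a `setPixel(i, j, r, g, b)` interface with a lower-left origin implies:
- Image files usually store the top row first, so row $j$ maps to storage row $H - 1 - j$.
- Color values outside $[0, 1]$ must be clamped before conversion to 8-bit channels.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical stand-in, NOT the provided PNGMaker: RGB bytes stored top row first.
class Image {
public:
    Image(int width, int height)
        : width_(width), height_(height),
          data_(static_cast<std::size_t>(width) * height * 3, 0) {}

    // (i, j) = (0, 0) is the lower-left pixel.
    void setPixel(int i, int j, float r, float g, float b) {
        assert(i >= 0 && i < width_ && j >= 0 && j < height_);
        // Flip the row index because storage starts with the top row.
        std::size_t row = static_cast<std::size_t>(height_ - 1 - j);
        std::size_t idx = (row * width_ + i) * 3;
        data_[idx + 0] = toByte(r);
        data_[idx + 1] = toByte(g);
        data_[idx + 2] = toByte(b);
    }

    const std::vector<std::uint8_t>& bytes() const { return data_; }

private:
    // Clamp to [0, 1] first: summed light contributions can exceed 1.
    static std::uint8_t toByte(float v) {
        v = std::min(std::max(v, 0.0f), 1.0f);
        return static_cast<std::uint8_t>(v * 255.0f + 0.5f);
    }

    int width_, height_;
    std::vector<std::uint8_t> data_;
};
```

Filling the whole image with white, as the provided raytrace() stub does, would then be a double loop calling `img.setPixel(i, j, 1.0f, 1.0f, 1.0f)` for every $i$ and $j$.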

The size of the output image is currently set to 250x250 by a couple of #defines at the top of the file, but you are free to set the resolution to whatever you like. Just be aware that ray tracing takes a long time, so you’ll probably want to test your code on smaller images before rendering at full size.

The raytrace() function takes two arguments: a pointer to the active Camera struct and a pointer to the singleton Scene instance. The Scene instance contains all of the information about the objects and primitives in the scene, as well as a vector called lights containing all of the lights in the scene as PointLight structs. Camera is defined at the top of UI.hpp and UI.cpp, while PointLight is defined at the top of Scene.hpp and Scene.cpp; both should be fairly self-explanatory in terms of their members and methods. The tree of objects will be familiar from part a. However, everything is now a member of a static singleton instance of Scene rather than a static member of the class itself (in other words, you have to use scene->member to access something, instead of Scene::member).
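One reasonable sketch of the lighting computation at a hit point assumes a Blinn-Phong model with per-light distance attenuation $1 / (1 + k d^2)$. `Light` and `Material` are hypothetical stand-ins. Check PointLight in Scene.hpp for the fields it actually provides, and the lecture notes for the exact lighting model expected.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <vector>

struct Vec3 { double x, y, z; };
Vec3 operator+(const Vec3& a, const Vec3& b) { return {a.x + b.x, a.y + b.y, a.z + b.z}; }
Vec3 operator-(const Vec3& a, const Vec3& b) { return {a.x - b.x, a.y - b.y, a.z - b.z}; }
Vec3 operator*(double s, const Vec3& v) { return {s * v.x, s * v.y, s * v.z}; }
Vec3 hadamard(const Vec3& a, const Vec3& b) { return {a.x * b.x, a.y * b.y, a.z * b.z}; }
double dot(const Vec3& a, const Vec3& b) { return a.x * b.x + a.y * b.y + a.z * b.z; }
Vec3 normalize(const Vec3& v) {
    double len = std::sqrt(dot(v, v));
    return len > 0.0 ? (1.0 / len) * v : v;
}

// Hypothetical records; the real PointLight may use different fields.
struct Light { Vec3 position; Vec3 color; double k; };  // k: attenuation constant
struct Material { Vec3 ambient, diffuse, specular; double shininess; };

// Blinn-Phong shading at hit point p with unit normal n, seen from `eye`
// (all in world space). Shadows are not handled here.
Vec3 shade(const Vec3& p, const Vec3& n, const Vec3& eye,
           const Material& m, const std::vector<Light>& lights) {
    Vec3 diffuseSum{0, 0, 0}, specularSum{0, 0, 0};
    Vec3 e = normalize(eye - p);  // direction toward the viewer
    for (const Light& light : lights) {
        Vec3 toLight = light.position - p;
        double d = std::sqrt(dot(toLight, toLight));
        Vec3 l = normalize(toLight);
        Vec3 c = (1.0 / (1.0 + light.k * d * d)) * light.color;  // attenuated color
        diffuseSum = diffuseSum + std::max(0.0, dot(n, l)) * c;
        Vec3 h = normalize(e + l);  // half vector
        specularSum = specularSum + std::pow(std::max(0.0, dot(n, h)), m.shininess) * c;
    }
    Vec3 out = m.ambient + hadamard(diffuseSum, m.diffuse) + hadamard(specularSum, m.specular);
    // Clamp so the result can go straight into a [0, 1] pixel value.
    return {std::min(1.0, out.x), std::min(1.0, out.y), std::min(1.0, out.z)};
}
```

Shadows are omitted from this sketch. A common extension is to cast a ray from the hit point toward each light. If another superquadric lies in between, that light's contribution is skipped.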

Finally, because ray tracing can take a long time, we spawn it in a separate thread that runs in the background while the rest of the program continues executing as usual. If your tracer simply used the scene’s live data structures, they could be altered through the command line in the middle of the raytrace() function. To avoid this, raytrace() receives copies of the Camera and Scene structs used throughout the rest of the program.
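The snapshot idea can be illustrated with standard C++ threads. The hypothetical `SceneState`, `renderSnapshot`, and `launchRender` below are not the provided code. They only show why copying the state before starting the thread protects the render from later edits.

```cpp
#include <cassert>
#include <thread>
#include <utility>
#include <vector>

// Hypothetical, heavily simplified scene state.
struct SceneState { std::vector<double> objectRadii; };

// Stand-in for the expensive render: it only ever sees its own copy.
double renderSnapshot(SceneState snapshot) {
    double total = 0.0;
    for (double r : snapshot.objectRadii) total += r;
    return total;
}

// Copy the live state first, then hand the copy to a background thread.
// Later edits to `live` (e.g. from the command line) cannot affect this render.
std::thread launchRender(const SceneState& live, double& result) {
    SceneState copy = live;  // snapshot taken before the thread starts
    return std::thread([copy = std::move(copy), &result]() mutable {
        result = renderSnapshot(std::move(copy));
    });
}
```

Depending on your toolchain, programs that use std::thread may need to be linked with `-pthread`.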

What to Submit

Before submitting, please comment your code clearly and appropriately, making sure to give details regarding any non-trivial parts of your program.

Submit a .zip or .tar.gz file containing all the files that we would need to compile, run, and test your program. In addition, please submit in the .zip or .tar.gz a README with instructions on how to compile and execute your program as well as any comments and information that you would like us to know.

We ask that you name your .zip or .tar.gz file lastname_firstname_hw7.zip or lastname_firstname_hw7.tar.gz respectively, where you replace the “firstname” and “lastname” fields with your actual first and last name.

Please submit your .zip or .tar.gz file to Moodle in the area for Assignment 7.

Originally written by Parker Won and Nailen Matschke (Class of 2017).

Modified and adapted by Lokbondo (Loko) Kung (Class of 2018).
